Best AI Tools to Generate Realistic Sad & Emotional Images (2025 Guide)

Meta description: Discover the best AI tools (2025) for generating photorealistic sad and emotional images, step-by-step prompts, ethical guidelines, post-processing tips, 3 real-world case studies, and 10+ FAQs to help artists, creators, and marketers create compelling emotional imagery responsibly.

Why this guide — and why “sad/emotional” images matter in 2025

Images that convincingly convey sadness, longing, grief, or subtle emotional states are among the most powerful visual assets a creator can use. Emotional imagery moves audiences, increases engagement, and can be central to storytelling, editorial work, campaigns, and film previsualization. In 2025, generative models have reached a level of realism where subtle facial micro-expressions, eye-glaze, tear reflections, posture and lighting can be synthesized convincingly — but doing it well still requires knowledge: the right tool, the right prompt engineering, post-processing, and, crucially, a strong ethical framework.

Recent releases from big players (Google’s image models, ByteDance’s Seedream family, Midjourney, OpenAI’s DALL·E, and Stable Diffusion-based ecosystems) have sharpened realism and editing control, and platforms like Runway and Lensa make end-to-end creation fast and iterative. These advances are exciting, and they also bring new responsibilities around consent and misuse.

Quick snapshot — top AI tools (2025) for realistic sad / emotional images

Below are the tools you should test if your goal is photorealistic emotional imagery. Short bullets describe why each stands out for this use case.

- Seedream (ByteDance): ultra-fast, high-resolution synthesis and fine-grained image editing; excels at photorealistic detail and reference consistency (good for consistent characters across scenes).
- Google Gemini Flash Image (aka “Nano Banana”) & Mixboard: integrated into creative tools (e.g., Photoshop beta) and designed for fast, realistic output and style blending; useful when you want direct Photoshop workflows.
- Midjourney (2025 updates): excels in mood, atmospheric lighting, and creative character consistency features (moodboards, references) that make emotional scenes cinematic.
- DALL·E (OpenAI): very strong at prompt-following and photorealistic faces with careful prompting; widely used in editorial contexts.
- Stable Diffusion ecosystem (SDXL, fine-tuned checkpoints): highest flexibility (custom checkpoints, LoRAs, ControlNet) for nuanced facial expressions and post-editing; great for local/custom workflows.
- Runway: a creative suite combining image and video generation; valuable when you want motion tests or to iterate emotional micro-expressions into short video.
- Lensa & mobile portrait apps: convenient for fast stylized emotional avatars and portrait filters when you work on mobile-first social content.

These are starting points; which is “best” depends on required realism, editor workflow, cost, and legal constraints.

How emotional realism is technically achieved (short explainer)

To generate believable sadness or subtle emotions, models must render:

- Facial micro-expressions: tiny muscle movements around eyes, brows, mouth.
- Eye detail: wetness, tear reflections, red rim, gaze direction.
- Posture and gesture: slump vs. upright, hands near face, clutching.
- Lighting & color grading: cool tones, low-key lighting, rim light for cinematic isolation.
- Contextual props & environment: rain, worn clothing, hospital room, empty bench.
- Rendering fidelity: skin pores, specular highlights, hair strands; these cue the brain to accept an image as “real.”

Generative models now combine language understanding, multimodal conditioning (image + text), and specialized checkpoints/fine-tunes to target these elements. ControlNet, reference images, inpainting, and step-based prompting are the practical tools creators use to guide results.

Step-by-step workflow: from title/idea to a publish-ready emotional image

Follow this reproducible workflow (works with most modern tools listed above):

Step 1 — Define intent & constraints (10 minutes)

- Decide the emotion (e.g., “deep grief,” “quiet melancholy,” “lonely longing”). Be specific.
- Define the use case: editorial, film previsualization, advertising, social post. Legal constraints differ by use case.
- Choose model/workflow: Seedream or Nano Banana for high realism; SDXL with ControlNet for custom, fine-tuned control; Midjourney for atmospheric, cinematic looks.

Step 2 — Collect references (15–30 minutes)

- Gather 3–6 reference photos for facial angles, lighting, costume, and environment (same person or look). Reference consistency helps models maintain believable identity and expression.
- For copyrighted images or real people, secure rights/consent.

Step 3 — Craft the prompt (20–45 minutes)

Use layered prompting: base description → expression details → camera specs → environment → mood modifiers → negative prompts. Below is a template (expandable; examples follow later).

Prompt template (photorealistic sad portrait):
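A minimal sketch of the layered structure in Python, assuming you assemble the prompt text yourself before pasting it into a tool. The `PromptLayers` class, its field names, and the sample wording are illustrative assumptions built from phrases used in this guide; they are not a syntax required by any particular generator.

```python
from dataclasses import dataclass, field


@dataclass
class PromptLayers:
    """Layers in the order the guide lists them; every default is illustrative."""
    base: str = "photorealistic portrait of a [age] [gender] person"
    expression: str = "eyes half-closed and glistening, micro-tremor in lower lip"
    camera: str = "85mm lens, f/1.8, shallow depth of field"
    environment: str = "empty bench, rain on the window behind"
    mood: str = "cool tones, low-key lighting, rim light"
    negatives: list[str] = field(
        default_factory=lambda: ["extra fingers", "deformity", "toothy smile"]
    )


def build_prompt(layers: PromptLayers) -> tuple[str, str]:
    """Return (positive_prompt, negative_prompt) as comma-joined strings."""
    positive = ", ".join(
        [layers.base, layers.expression, layers.camera, layers.environment, layers.mood]
    )
    negative = ", ".join(layers.negatives)
    return positive, negative


positive, negative = build_prompt(PromptLayers())
print(positive)
print("Negative:", negative)
```

Keeping each layer in its own field makes it easy to change one layer per iteration (for example, only the expression wording) and compare results. In tools with a separate negative-prompt field, negatives are usually listed as bare terms, as here; in tools without one, they would need to be phrased inside the main prompt.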

Step 4 — Choose generation method & controls (5–20 minutes)

- Reference conditioning: upload your reference(s) and enable “image prompt” or “image-to-image” mode.
- ControlNet / depth maps: for pose control or preserving composition.
- Face restoration / upscaling: use built-in or external tools (GFPGAN, Real-ESRGAN).

Step 5 — Iterate (15–60+ minutes)

- Run 3–10 variations, tweak prompts:
  - Tighten “expression” words (e.g., “eyes half-closed and glistening, micro-tremor in lower lip”).
  - Add camera terms for realism.
  - Add negatives for artifacts (“no extra fingers, no deformity, avoid toothy smile”).
- Blend outputs or use inpainting to correct small issues.
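The iteration step can be organized as a small grid of prompt variants and seeds. The sketch below only builds the job list; it does not call any generator. The dictionary keys (`prompt`, `negative_prompt`, `seed`) and the seed values are assumptions chosen for illustration, so map them to whatever fields your tool actually exposes.

```python
import itertools

base_prompt = "photorealistic portrait, quiet melancholy, 85mm lens, f/1.8"
expression_variants = [
    "eyes half-closed and glistening",
    "micro-tremor in lower lip",
    "downcast gaze, red-rimmed eyes",
]
seeds = [101, 202, 303]  # arbitrary values; fixed seeds make a run repeatable where supported
negative = "extra fingers, deformity, toothy smile"


def variation_jobs(base, variants, seeds, negative):
    """Yield one job dict per (expression variant, seed) pair."""
    for variant, seed in itertools.product(variants, seeds):
        yield {"prompt": f"{base}, {variant}", "negative_prompt": negative, "seed": seed}


jobs = list(variation_jobs(base_prompt, expression_variants, seeds, negative))
print(len(jobs))  # 3 variants x 3 seeds = 9 jobs
for job in jobs[:2]:
    print(job)
```

Changing one variable at a time (wording or seed) makes it easier to see which tweak actually improved the expression.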

Step 6 — Post-processing (20–60 minutes)

- Color grade for cohesive tone (e.g., split toning, desaturation of midtones).
- Subtle dodge & burn to emphasize eye wetness and shadows.
- Add film grain and slight chromatic aberration for realism.
- Run face-aware restoration only if needed to avoid over-smoothing.

Step 7 — Legal & ethical check (5–15 minutes)

- Confirm no real person’s likeness is used without consent.
- Be careful with images that could be emotionally manipulative (e.g., depicting victims).
- Add disclaimers where required. (More on ethics later.)

Detailed prompt library — ready-to-use prompts (adapt to your tool)

Below are modular prompts you can paste into any capable generator. Replace bracketed parts for customization.

1) Cinematic sad portrait (photorealistic)

2) Emotional long-shot (loneliness)

3) Subtle micro-expression study (for editorial)

4) Family grief scene (group emotion)
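As a starting point for the four categories above, here is a hedged sketch of a template table with bracketed placeholders. The prompt wording is an illustrative assumption assembled from vocabulary used elsewhere in this guide, not a set of tested prompts, and the `fill` helper is hypothetical.

```python
import re

PROMPTS = {
    "cinematic_sad_portrait": (
        "Photorealistic cinematic portrait of [subject], eyes glistening with a tear "
        "reflection, low-key lighting with rim light, cool tones, 85mm f/1.8, "
        "visible skin pores and hair strands"
    ),
    "emotional_long_shot": (
        "Wide shot of [subject] alone on an empty bench in [location], slumped posture, "
        "rain, cool desaturated midtones, cinematic grade"
    ),
    "micro_expression_study": (
        "Close-up editorial portrait of [subject], subtle tension around brows and mouth, "
        "red-rimmed eyes, gaze directed away from camera, natural skin texture"
    ),
    "family_grief_scene": (
        "Group of [subject] in [location], hands near faces, worn clothing, "
        "low-key lighting, muted color grade"
    ),
}


def fill(template: str, **values: str) -> str:
    """Replace each [name] placeholder; raise KeyError if one is left unfilled."""
    def substitute(match):
        key = match.group(1)
        if key not in values:
            raise KeyError(f"missing value for [{key}]")
        return values[key]
    return re.sub(r"\[(\w+)\]", substitute, template)


print(fill(PROMPTS["emotional_long_shot"], subject="an elderly man", location="a city park"))
```

Failing loudly on an unfilled placeholder avoids sending a literal "[location]" to the generator.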

Post-processing recipes that improve emotional realism

- Eye focus & wetness: dodge the tear highlights and increase micro-contrast around tear reflections; add a tiny specular point to the tear to mimic light reflection.
- Skin micro-variations: avoid oversmoothing; maintain pores and tiny redness near eyes (subtle) to hint at crying.
- Color grading: cooler shadows and midtones with warmer highlights create a cinematic, melancholic tone. With split toning, for example, shift shadows about 10 points toward blue and highlights about 8 points toward warm; the exact scale depends on your editor.
- Grain & texture: very subtle film grain reintroduces imperfection and believability.
- Sharpen selectively: eyes and tear area sharpened slightly; avoid global oversharpening.
- Composite for narrative: add elements like rain streaks on a window, torn photograph edges, or a headline overlay in editorial uses (but only with explicit disclosure if representing real people).
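To make the split-toning and grain recipes concrete, here is a per-pixel sketch in plain Python with no image library. Mapping the "10 toward blue, 8 toward warm" guidance onto raw channel offsets is an assumption made for illustration; real editors apply split toning in their own color models, and the function names here are hypothetical.

```python
import random


def luminance(rgb):
    """Rec. 709 luma of an (r, g, b) pixel with 0-255 channels, scaled to 0-1."""
    r, g, b = rgb
    return (0.2126 * r + 0.7152 * g + 0.0722 * b) / 255.0


def clamp(value):
    """Round to an integer and keep it inside the 0-255 channel range."""
    return max(0, min(255, int(round(value))))


def split_tone(rgb, shadow_shift=10, highlight_shift=8):
    """Push dark pixels toward blue and bright pixels toward warm (red up, blue down)."""
    r, g, b = rgb
    lum = luminance(rgb)
    shadow_weight = 1.0 - lum   # strongest in shadows
    highlight_weight = lum      # strongest in highlights
    r = r + highlight_shift * highlight_weight - shadow_shift * shadow_weight * 0.5
    b = b + shadow_shift * shadow_weight - highlight_shift * highlight_weight
    return (clamp(r), g, clamp(b))


def add_grain(rgb, rng, sigma=3.0):
    """Add one Gaussian offset to all channels of a pixel (monochrome grain)."""
    noise = rng.gauss(0.0, sigma)
    return tuple(clamp(c + noise) for c in rgb)


pixels = [(30, 30, 30), (220, 220, 220)]  # one dark and one bright grey sample
rng = random.Random(0)  # seeded so the grain pattern is repeatable
graded = [add_grain(split_tone(p), rng) for p in pixels]
print(graded)
```

The dark sample ends up with more blue than red and the bright sample with more red than blue, which is the cool-shadow, warm-highlight look described above. A small `sigma` keeps the grain subtle.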

Case studies — 3 real-world examples (mini)

Case study A — Editorial feature: “After the Storm”

A magazine needed a photorealistic portrait series illustrating grief without using real subjects. The creative team used Seedream for initial synthesis because of fast high-res rendering and reference consistency across characters. Prompts specified micro-expressions and consistent lighting across five images. Final images were refined in Photoshop (spot inpainting, color grade). Result: a six-page spread with cohesive mood and no legal consent issues because no real person was represented.

Case study B — Short film previsualization

A director used Runway to generate emotional stills to pitch a short film. Runway’s video iteration and image-to-image features allowed the team to test subtle changes in actor expressions across frames to plan direction for real actors. Output served as a visual bible for casting and lighting.

Case study C — Social awareness campaign

A nonprofit wanted to illustrate loneliness for a mental health campaign. They used SDXL with a custom emotional LoRA model (trained on licensed editorial references) to generate a consistent protagonist across assets (banner, poster, short clip). They included an ethics statement and support resources alongside the campaign. Post-processing emphasized eye detail and added subtle film grain for authenticity.

Ethical & legal checklist (must-read)

Generating emotional images is powerful and sensitive. Before publishing:

- Consent & likeness: don’t base images on real persons without explicit consent. If you must, have signed releases.
- Misrepresentation: don’t depict a real private event or claim the image is of a real person (label as “AI-generated” where necessary).
- Trauma sensitivity: avoid exploiting images of victims or trauma for clickbait; consult stakeholders.
- Copyright: check model/data provenance where possible, since some models may be trained on copyrighted photos. Use tools and platforms that surface licensing.
- Platform policies: review the platform’s terms (some restrict realistic faces, minors, or look-alikes).
- Disclosures: in ads/editorial, disclose AI generation if it may mislead.
- Local law compliance: deepfake, likeness, and privacy laws vary by jurisdiction (consult legal counsel for sensitive use).
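Teams that publish in volume may find it useful to encode part of this checklist as a simple pre-publish gate. The sketch below is one possible shape, with hypothetical field names; it covers only the items that reduce to yes/no answers and is not a substitute for legal review.

```python
from dataclasses import dataclass


@dataclass
class PublishCheck:
    """Yes/no answers recorded by a human reviewer; all field names are illustrative."""
    uses_real_likeness: bool
    has_signed_release: bool
    could_mislead_viewers: bool
    labeled_ai_generated: bool
    depicts_trauma_or_victims: bool
    stakeholders_consulted: bool
    platform_terms_reviewed: bool


def blocking_issues(check: PublishCheck) -> list[str]:
    """Return a list of reasons not to publish yet; an empty list means no blockers found."""
    issues = []
    if check.uses_real_likeness and not check.has_signed_release:
        issues.append("real likeness without a signed release")
    if check.could_mislead_viewers and not check.labeled_ai_generated:
        issues.append("potentially misleading image lacks an AI-generated label")
    if check.depicts_trauma_or_victims and not check.stakeholders_consulted:
        issues.append("trauma-related imagery without stakeholder consultation")
    if not check.platform_terms_reviewed:
        issues.append("platform terms not reviewed")
    return issues


draft = PublishCheck(
    uses_real_likeness=False,
    has_signed_release=False,
    could_mislead_viewers=True,
    labeled_ai_generated=False,
    depicts_trauma_or_victims=False,
    stakeholders_consulted=False,
    platform_terms_reviewed=True,
)
print(blocking_issues(draft))
```

Copyright provenance and local law are deliberately left out because they need case-by-case judgment rather than a boolean.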

Tips & advanced tricks for pro-level emotional output

- Use multiple references: upload 3–4 reference images showing the same subject from different angles and under varied lighting; this helps maintain identity consistency.
- Layer prompts: first generate base mood shot; then use inpainting to correct eye detail and tear placement.
- Use camera & film language: specifying lens, aperture, film stock (e.g., “85mm f/1.8, Kodak Portra, cinematic grade”) pushes models to realistic outputs.
- Negative prompts: be explicit about avoiding common artifacts (“no extra fingers, no deformed ears, no text overlays, no watermark”).
- Face-aware restoration: apply cautiously, because too much smoothing kills micro-expressions.
- Blend generators: try the same prompt on two different models, then composite the best elements (e.g., Seedream skin + SDXL eyes).
- Human in the loop: always curate and tweak; fully automatic outputs rarely meet final-quality expectations for subtle emotion.

Tool selection: which to choose and when

- Fast iteration + Photoshop integration: Google Nano Banana / Gemini (via Photoshop beta). Use for rapid concepting inside Photoshop.
- Highest photorealism & reference consistency: Seedream (ByteDance), great for editorial and large batches where detail matters.
- Cinematic mood & community styles: Midjourney, strong for atmosphere, moodboards, and stylized realism.
- Customizable & locally-run pipelines: Stable Diffusion (SDXL + LoRAs + ControlNet), best if you need private, repeatable, and tunable results.
- Video + motion tests: Runway, for when you want to test expression changes over time.
- Mobile-first portrait edits: Lensa, for quick stylized portraits and avatar packs.

10+ Frequently Asked Questions (FAQs)

Q1: Can AI generate a perfectly realistic portrait of a real person?

A1: Technically yes: generative models can reproduce realistic faces. But using a real person’s likeness without consent can be illegal or ethically wrong. Get signed consent, or use synthetic faces that do not resemble any real individual.

Q2: Which model makes the most realistic tears and eye wetness?

A2: Effectiveness varies by prompt and model. Seedream and SDXL checkpoints with targeted inpainting tend to render convincing tear reflections; prompt emphasis on “specular highlight on tear” helps.

Q3: Are there ready-made “emotion” LoRAs or model checkpoints?

A3: Yes. The Stable Diffusion ecosystem hosts emotion-focused checkpoints and prompt packs, but check licensing and provenance before using commercially.

Q4: How do I avoid the uncanny valley?

A4: Keep micro-details realistic (skin pores, hair strands), avoid over-perfection, use subtle imperfections (small stray hairs, skin texture), and prioritize authentic lighting. Apply manual retouching gradually, checking the result at each step.

Q5: What camera settings in a prompt work best?

A5: Use standard portrait specs: a 50–85mm lens (85mm is a common choice), f/1.4–f/2.8 for shallow depth of field, and natural ISO values (100–400). Film or cinema terms such as a film stock or “cinematic grade” can also help. These cues help models simulate realistic depth and blur.

Q6: Should I always disclose images as AI-generated?

A6: Best practice is to disclose when the image could mislead viewers (especially editorial, political, or documentary contexts). Always follow platform rules and local laws.

Q7: Can I train my own emotional-expression model?

A7: Yes. Fine-tuning SDXL or building a LoRA on licensed emotional-expression datasets is common. This requires compute and careful ethics review.

Q8: How do I get consistent characters across multiple images?

A8: Use reference images, seed control, and models that support multiple references or “character consistency” features (some platforms provide character stacks or moodboard features).

Q9: Which tool is best for editorial photos (magazine-quality)?

A9: Seedream and SDXL-based pipelines can produce magazine-grade results when combined with inpainting and post-processing. Midjourney can be used for mood exploration.

Q10: Will social platforms detect AI images?

A10: Platforms are developing detection and labeling tools; detection accuracy varies. Even if detection fails, you should still follow disclosure and ethical rules.

Q11: Are there model-specific tips for sad images?

A11: Yes. For Midjourney, lean into moodboard/style references and use the “--stylize” parameter to dial atmosphere; for SDXL, use ControlNet for pose/face alignment; for Seedream, use multiple references to preserve identity across shots.

Troubleshooting common problems

- Artifacted tears or glossy eyes that look fake: increase resolution, run a focused inpainting pass on the tear area, and add a tiny specular highlight.
- Face looks “plastic”: reduce face-restoration strength, reintroduce skin pores, add micro-contrast, and avoid extreme smoothing.
- Inconsistent character between images: use a single consistent reference and seed and, where available, the tool’s character lock or style/character reference features (such as Midjourney’s “--sref” and “--cref”).

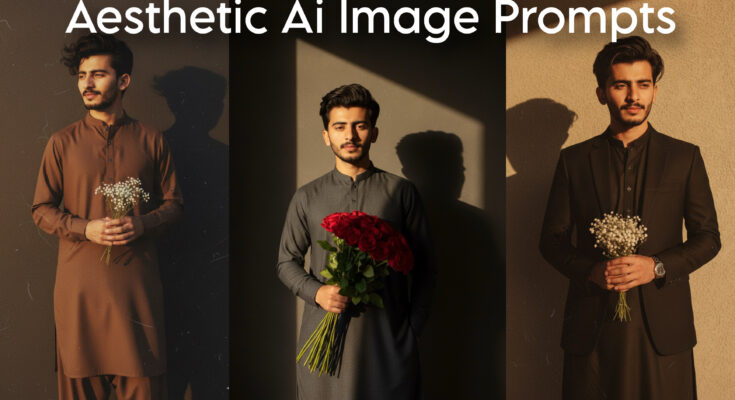

Prompt:

“Ultra-realistic cinematic portrait of a stylish young man standing against a dark minimal background with a soft golden sunlight beam casting dramatic shadows. He is wearing a black kurta with one hand casually in his pocket and the other holding a bouquet of red roses close to his chest. His hair is voluminous and slightly tousled, styled naturally with texture. His expression is serene and confident, eyes closed, basking in the warm sunlight. The composition highlights the play of light and shadow, with a strong shadow silhouette falling on the wall behind him. The mood is romantic, artistic, and editorial – capturing both sophistication and vulnerability. Shot in high resolution, with cinematic tones, sharp details, and soft contrast.”


SEO & publishing checklist for your blog or portfolio page

- H1: Title (your main keyword must appear).
- H2: Use subheadings for each major section (e.g., Tools, Workflow, Ethics).
- Include alt text for images (describe emotion & generation method).
- Add schema for articles and images if possible.
- Include read time, CTA, and a short meta description (see the example at the top of this guide).
- Provide disclosure for AI-generated images and a short note about model provenance.
- Include keywords naturally: “AI emotional images 2025,” “generate sad portraits AI,” “photorealistic grief portrait AI,” etc.
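For the schema item in the checklist above, a minimal sketch of schema.org `Article` markup with a nested `ImageObject`, generated from Python. The URL, date, and caption are placeholders, and the helper name `article_jsonld` is hypothetical; check your CMS or SEO plugin first, since many emit this markup automatically.

```python
import json


def article_jsonld(headline, description, image_url, image_caption, date_published):
    """Build a schema.org Article dict with one nested ImageObject."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "description": description,
        "datePublished": date_published,
        "image": {
            "@type": "ImageObject",
            "contentUrl": image_url,
            "caption": image_caption,
        },
    }


data = article_jsonld(
    headline="Best AI Tools to Generate Realistic Sad & Emotional Images (2025 Guide)",
    description="Tools, prompts, ethics, and post-processing for emotional AI imagery.",
    image_url="https://example.com/images/sad-portrait.png",  # placeholder URL
    image_caption="AI-generated portrait conveying quiet melancholy",
    date_published="2025-01-01",  # placeholder date
)

# Paste the output inside a <script type="application/ld+json"> tag in the page head.
print(json.dumps(data, indent=2))
```

Putting the AI-generation disclosure in the image caption keeps the structured data consistent with the on-page alt text and disclosure note.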

Suggested focus keywords (comma-separated):

AI emotional images 2025, generate sad images AI, photorealistic sad portrait AI, Seedream emotional images, Nano Banana portraits, Midjourney emotional art, SDXL sad portrait prompts.

Final thoughts & best practices (short)

Realism in sad and emotional images is now accessible to creators — but good outcomes come from a combination of the right tool, carefully crafted prompts, reference images, patient iteration, and a responsible ethical approach. Use the tool that fits your workflow, and never skip the legal/consent checklist when real identities or sensitive contexts are involved.